Memorylessness and Entropy

Any one who has delved into probability or stochastic will have come across the idea of a Markovian system1: one where each new state or value is dependent on the one that immediately precedes it. However, this is generally only ever examined in the contexts where it is known that the property holds, or that accepting the property is a valid approximation.

What I'd like to discuss today is what can happen if you examine a process with a limited amount of memory without out any knowledge of its properties, and the conclusions that follows, concluding with the similarities between finding models .

Consider then the time-variant random variable X, which is defined as:

This process, although contrived, has some interesting properties. Firstly, it is wide-sense stationary23. It does, however, clearly have some form of memory as every other item follows a high-low pattern.

However, the state space is only defined as a single bit. If we do the analysis considering it as such, either analytically4, or by looking at samples, an entirely different pattern emerges.

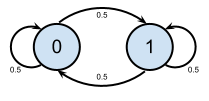

This gives us the state transition below, and a process that does not only satisfy the Markovian property, but is entirely memoryless.

From here on, thing get even better. The process described has gone from wide-sense stationary to being a truly stationary process5. Analytically, it also carries no redundant information — the standard test of bitwise Shannon entropy6 yields the following:

So, the overall information content is the same as the length of the transmission.

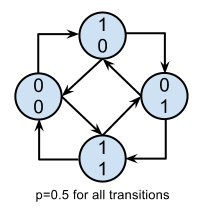

If we redo the analysis, this time taking:

giving the values of Y in the range 0 to 3. The state transition diagram is

shown (derivation omitted). In this case, the system has four states with

equal probability over the duration of a sample, still giving a Shannon

entropy of 1bit/bit. However, the transition is now dependent on the current

state, so we're back to the standard definition of the Markovian property.

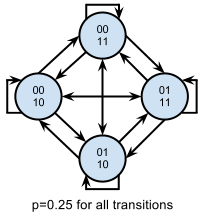

We can do it again taking four consecutive values. As we know that the first and third values must be opposite and consistent across each sample, we find that only four of the sixteen available states will occur7. The second and fourth values are truly random, and there is no dependence between the four-bit blocks. Thus we would arrive at state diagram where each of the four nodes is connected to all of the nodes, including itself.

This system is back to being entirely memoryless, but now has a lower

information efficiency. The Shannon entropy is then calculated as:

This is what one would expect when half of the bits are fixed.

If we were then to take n*4-bit samples, we'd be able to reach any combination of the 4-bit patterns repeated n times, giving 4n possibilities of equal probability, out of 16n possible

values. This allows us to derive a general case entropy:

Using this, we can plot a chart of the apparent entropy against the word size, allowing us to get a hint at the underlying pattern. We could augment this by looking at the other possibly values, those which are not powers of 2. Those of you with an interest in cryptography will have realised this is a slow replication of a Kasiski examination8 style of analysis on a Vigenère cipher9. This, I suppose, underlies the problem with attempting to model unknown systems: they are, in man respects, a cipher for reality.

- 1 ↑ http://en.wikipedia.org/wiki/Markov_property

- 2 ↑ http://en.wikipedia.org/wiki/Stationary_process#Weak_or_wide-sense_stationarity

- 3 ↑ The mean is a constant (0.5) and the auto correlation function depends only on the distance between the two samples.

- 4 ↑ Which is somewhat stupid as we're knowingly removing data.

- 5 ↑ http://en.wikipedia.org/wiki/Stationary_process#Weak_or_wide-sense_stationarity

- 6 ↑ http://en.wikipedia.org/wiki/Entropy_(information_theory)#Definition

- 7 ↑ That the first value is 0 is not an assumption, as we may simply define however the very fist bit is encoded as the representation of 0 in this system.

- 8 ↑ http://en.wikipedia.org/wiki/Kasiski_examination

- 9 ↑ http://en.wikipedia.org/wiki/Vigen%C3%A8re_cipher